The Listeners (2015- )

Custom software and aurally accessible linguistic compositions for the Amazon Echo's 'Alexa,' using ASK (the Alexa Skills Kit).

Originally installed in a simple sound isolation unit of wood and plywood with sound absorption panels.

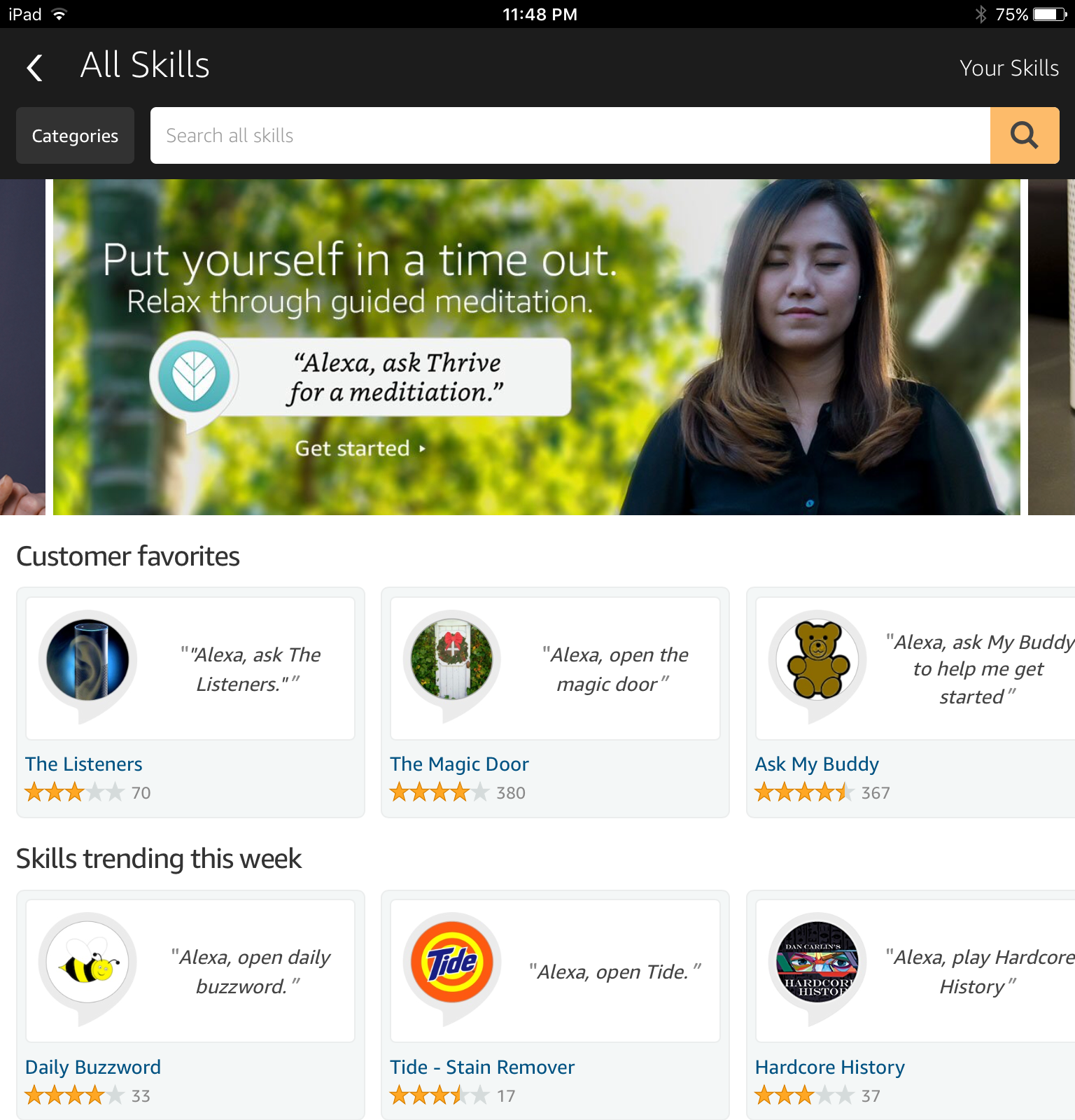

Here is Amazon’s webpage for The Listeners skill.

Version 2, released May-June 2016, features a vocal performance by Ian Hatcher of texts composed by John Cayley, with sound design assistance from Ben Nicholson.

The version number is now (as of April 2020) 2.5. Significant changes in late 2019 allowed for some regional English variations, and the skill is released with voices for the AU, CA, GB, IN, and US regions.


The Listeners is a linguistic performance, installation, and Amazon-distributed third-party app or skill – transacted between speakers or speaker-visitors and an Amazon Echo. The Echo embodies a voice-transactive Artificial Intelligence and domestic robot, that is named for its wake-word, Alexa. The Listeners is a custom software skill built on top of this infrastructure. The Listeners have their own interaction model. They listen and speak in their own way – as designed and scripted by the artist – using the distributed, cloud-based voice recognition and synthetic speech of Alexa and her services.

It was on Dec 26, 2015 that The Listeners was first Amazon-certified as a skill generally available for Alexa and the Echo. Version 2 was certified June 3, 2016. See below for further details.

It is now (early 2019) possible to transact with The Listeners without having to acquire an Echo device; all you need is a smartphone and the Alexa app. Install the app and then either sign in with an Amazon account or create one (you can delete it later). Then, using the app, either a) find 'The Listeners' by searching under the Skills tab and enable it; or b) start using the app as an Echo device by tapping the Alexa icon (middle of the bottom register on iOS) and, when it listens, say, 'Enable the Listeners.' Then tap it again and say, 'Ask the Listeners.'

TRANSACTION

Speakers begin the transactive conversational performance by addressing Alexa and The Listeners, wherever and however they are sited, saying clearly:

"Alexa, ask The Listeners."

Whenever Alexa and The Listeners have finished speaking and while their blue lights are still illuminated, speakers may continue the transactive performance by saying such things as: "Continue" or "Go on" or – if they are so inclined – "I am filled with anger" or some other indication of how they are feeling at the time.

If Alexa and The Listeners' lights have gone out – this happens after eight seconds of silence – speakers must begin any further transaction by saying something like:

"Alexa, ask The Listeners to continue."

If speakers omit a request, Alexa and The Listeners will welcome them as before, and may behave as if they had not previously encountered these speakers. Speakers may also say, "Alexa, tell The Listeners to …" or, "Alexa, tell The Listeners that …"

Please follow this link for further instructions on enabling The Listeners as a skill and transacting.
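The turn-taking described above can be sketched in code. What follows is a minimal illustration, not the artist's implementation: the intent names `ContinueIntent` and `FeelingIntent`, the `feeling` slot, and all of the spoken texts are hypothetical placeholders. It assumes only the general shape of Alexa custom-skill requests and responses, in which an invocation without a request arrives as a `LaunchRequest`, a recognized utterance arrives as an `IntentRequest`, and `shouldEndSession` set to false leaves the device listening for a reply without the wake-word.

```python
# Hypothetical sketch of the transaction flow. Intent names, slot names, and
# spoken texts are placeholders, not taken from The Listeners itself.

def build_response(text, end_session=False):
    """Wrap speech text in an Alexa-style custom-skill response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            # False keeps the session open so the speaker can reply directly.
            "shouldEndSession": end_session,
        },
    }


def handle_request(event):
    """Route one incoming request to a spoken reply."""
    request = event.get("request", {})
    request_type = request.get("type")

    if request_type == "LaunchRequest":
        # "Alexa, ask The Listeners." with no request: welcome the speaker.
        return build_response("Welcome. We are listening.")

    if request_type == "IntentRequest":
        intent = request.get("intent", {})
        name = intent.get("name")

        if name == "ContinueIntent":
            # "Continue" or "Go on".
            return build_response("We continue to listen.")

        if name == "FeelingIntent":
            # "I am filled with anger": the feeling arrives as a slot value.
            slots = intent.get("slots") or {}
            feeling = (slots.get("feeling") or {}).get("value")
            if feeling:
                return build_response(f"We hear that you are filled with {feeling}.")
            return build_response("We did not catch how you are feeling.")

    # Anything unrecognized gets a neutral reply and the session stays open.
    return build_response("We are not sure what you intend.")
```

In this sketch every reply leaves the session open, which corresponds to the period in which the lights remain on and a speaker may simply say "Continue"; once the session lapses, the next utterance has to begin again with the wake-word.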

SELECTED AUDIO

• A Recording with Two Sample Sessions from Version 1 – recorded in the Bell Gallery installation on 23 November 2015:

The voices transacting with The Listeners are those of John Cayley, Joanna Howard, and Joanna Ruocco. The sessions were both slightly edited.

• Álvaro Seiça made a recording of his conversation with the first version of The Listeners while they were still installed at the Brown Faculty Show, on Dec 4, 2015, and this is posted on SoundCloud.

• Sample Session from Version 2 – recorded at the request of the Forms of Criticism conference organizers (see below) and distributed on CD in London June 30, 2016. John Cayley is transacting with The Listeners; the ‘Other(s)’ are performed by Ian Hatcher:

• Sample Session from Version 2 UK – recorded in London, and providing the artist with an initial demonstration of the UK English performance of The Listeners by British 'Alexa', Jan 21, 2017:

SURVEILLANCE

Amazon's standard Alexa-activated services are not disabled for any installation or performance. Speakers are welcome to use these services, but must bear in mind that – in the installation or performance situation – this is within the scope of the artist's Amazon account and that, as a function of the way The Listeners' services are implemented, records of all transactions with Alexa and The Listeners are sent to the artist's Alexa app and the alexa.amazon.com website. When speakers enable The Listeners as a skill for their own account and with their own Echo, however, the transactions are not sent to the artist; they are simply fed back to the speaker's account.

I've made hastily produced MP3s captured from the speaker-transactions with The Listeners for each day while they were installed during the Brown University Faculty Show, one day during setup at the Counterpath Open Opening, and for the few days of the ISEA exhibition in Hong Kong.

The audio files are available here.

'What Alexa hears' after the wake-word – implicitly and presumptively interpreted as an 'intent' to solicit a response from her cloud-/silo-located 'intelligence' – is fed back to the interlocutor who owns the account to which she is registered. This happens more or less instantaneously and the transactions are exposed on the Alexa app or website. The ability to access these records comes across, even to the account-owner, as extraordinary, magical. The app and the website present 'what was heard' both transcribed and in the form of the optimized digital recordings that were made by the Echo, by Alexa. When The Listeners is gallery-installed, they are functioning in a test or developer's mode, within the scope of the artist's account. They are in a public space. The speaker-readers are informed of these circumstances. The transactions tend to be, as it happens, minimal and innocuous. It is nonetheless shocking that strangers' voices have been captured in this way, and to unknown ends. And yet, on reflection, we are now used to similar kinds of surveillance in public spaces, particularly in the form of CCTV recordings.

The same relation, however, obtains for the domestic owner of an Amazon Echo. If such a device is in the home – the space that it is, chiefly, intended for – then any and all of the householder/account owner's guests will be overheard by Alexa and if they 'wake' her, these guests' presumed 'intents' will be harvested, not only by Amazon Voice Services but also, personally, by the householder.

Who amongst us has even begun to comprehend the implications of these extraordinary circumstances with regard to hospitality, privacy, language, human persons and their interrelations?

MANIFESTATIONS

The Listeners have been exhibited, performed, and published as follows:

- In the Brown University Faculty Show, 2015, Bell Gallery, List Arts Building, Providence, RI, Nov 6-Dec 21.

- For the Open Opening, New Years 2015-16, of the new Counterpath Press Gallery, Denver, CO, Dec 31 thru mid-January.

- In the juried exhibition, Cultural R>Evolution, 2016, Run Run Shaw Creative Media Centre, City University of Hong Kong, as a part of the ISEA 2016 Hong Kong Symposium, May 16-22.

Catalogue: Kraemer, Harald, Daniel C. Howe, and Kyle Chung. ISEA 2016 Hong Kong: Cultural R>Evolution. Hong Kong: School of Creative Media, City University of Hong Kong, 2016, pp. 58-59. ISBN 9789624423969.

- At The Kitchen, NYC, on Sept 10, 2016 as part of a show curated by Illya Szilak, We have always been digital, sponsored by the ELO.

Videography by Iki Nakagawa, courtesy of The Kitchen.

- As a CD-published audio contribution to the 'Forms of Criticism' conference organized by The Institute for Modern and Contemporary Culture, University of Westminster at Parasol Unit, London, UK, June 30, 2016. (Audio available, linked above.)

- Juried into the conference exhibition for SLSA 2016, Atlanta, GA, 3-6 Nov 2016.

- Published as 'The Listeners: An Instance of Aurature.' Cream City Review 40.2 (2016): 172-87 and in this journal's online I/0 section.

This publication introduced the possibility of experiencing The Listeners without having to own an Echo device, using echosim.io. Instructions are published in Cream City Review and also provided here.

- Performance for WordHack at Babycastles, NYC, Feb 16, 2017. Linked live recording.

- Performances and critical introductions for both parts of the symposium on Critical Digital Humanities at Dartmouth College April 21-22, 2017, and the University of Westminster May 21-22, 2017, organized by those institutions with the support of the British Academy.

- Performance for the Writers on Writing series of Brown University, Department of Literary Arts, McCormack Family Theater, February 28, 2019.

AURATURE

The Listeners is an instance of aurature. Please see certain programmatology pages devoted to this practice. I have published on aurature in the Electronic Book Review, 'Aurature at the End(s) of Electronic Literature'. This will be followed, in due course, by a companion piece focusing on The Listeners in an edited volume. A longer piece with a more language-philosophical conclusion will be coming out as a chapter of the forthcoming Bloomsbury Handbook of Electronic Literature.

ASK

The Listeners was made using the Alexa Skills Kit, a framework that allows developers to program new voice-transactive skills for Alexa and the Amazon Echo. During the installation in Brown University's Bell Gallery, my skill, The Listeners, was in development mode. It was fully operational, but only accessible to my own, developer's account. This nonetheless allowed me to gather and respond to all visitor transactions during the installation. I was sent "what Alexa heard" not only in the form of transcriptions but also as short audio recordings of the visitors' vocalized 'intents.' (This is Amazon's term for utterances that follow the 'wake word.') I worked hard and continuously, during the exhibition, to make The Listeners 'better' and then, even before the exhibition had concluded, I determined to submit my skill for certification. Quite unexpectedly (unexpected for a variety of reasons) I received notice of certification on Dec 26, 2015. This means that anyone with an Amazon Echo can enable The Listeners as a skill for Alexa. An updated version of The Listeners was certified by Amazon – after development associated with the ISEA exhibition – on June 3, 2016. All enabled instances of The Listeners will have been silently updated to the new version.

REVIEWS

If you have an Amazon Echo, please do consider enabling The Listeners by visiting the 'Skills' section of the Alexa app or website. Humanists! Artists! Critics! Give The Listeners a review. Development of the skill will continue for the foreseeable future.

As of the launch of the new version of The Listeners, there were 16 reviews of the skill accessible from the alexa.amazon.com site and the Alexa app. The average star rating was 4.2 out of 5 stars. You can read the reviews here.

Since the release of The Listeners version 2, there have been 8 more reviews and The Listeners was selected by Wire Magazine online as number 7 among The Ten Best Amazon Echo Skills for Loners.

In December 2016, The Listeners found itself in the 'Education and Reference' 'shovel' of the alexa.amazon.com 'Skills' page. ('Shovel' is a term for the banded horizontally-scrolling featured products marketing affordances that are a staple of contemporary – 2010s – media interfaces.) Then in January 2017, The Listeners was a 'Customers Favorite' for a week. There were many more reviews, and they were quite 'mixed' (!).

As of June 2017, there are 91 reviews for The Listeners as an Alexa skill (and they still have 3.x stars). Reviews so far uncollected are linked here. Enjoy.

Reviews keep trickling in. As of January 21, 2020, there were 117, and with 355 ratings The Listeners still have 3.5 stars. Reviews so far uncollected are linked here.

STATEMENTS

As mentioned above, I have written and I am writing essays on aurature and transactive synthetic language following from the composition of The Listeners and my associated research. For the ISEA exhibition in May 2016 summary statements based on this work were drafted. I provide lightly edited versions here.

Transactive synthetic language and its emergent art have statements to make about: synthetic language and human embodiment; the robot imaginary; identity, integral embodied identity and new modes of being; the voice and individuality; the 'neutral' voice; the inner voice; the voice of reading; artificial intelligence and identified artificial intelligences (AIs); network services and personhood; network services, AIs, and surveillance; Big Data and Big Software; artificial intelligence, AIs, and social relations; network services, AIs, and privacy; the 'smart' home; transactive synthetic language and domotics; the terms, control, and ownership of network services and their artifacts; the future of reading; the future of literature; the future of the archive as language. It is difficult to exaggerate the consequences of the developments that make The Listeners possible – for the art of language, and also for political economy and culture as a whole.

The Amazon Echo and its vocal identity, Alexa, usher in a new era in the development and dissemination of automatic voice recognition and synthesis, the advent of transactive synthetic language.

In common with the other popular AIs – Siri, Cortana, Watson – Alexa has 'a beautiful voice' (as one of The Listeners' visitor-speakers insisted). These voices are our new media. The experience of these entities' 'human' voices will have profound effects on our understanding of human individuality and subjectivity.

The Listeners represent an instance of aurature, demonstrating certain potentials for practices in a novel medium – transactive synthetic language in aurality – and certain implications for these practices.

As these media give us inexpensive, indexed access to linguistic artifacts that are supported by aurality rather than visuality, the practices that we take to be 'reading' will change. Indeed, they have already changed. This is a momentous and material cultural transfiguration of reading, one that is – due to a reconfiguration of linguistic material supports – of greater significance than those represented by differences in modes of reading and attention that have been characterized as 'deep' vs. 'hyper.'

Practices of reading in aurature and composition as aurature will lead to a renaissance in the arts of language, a renaissance enabled, necessarily, by certain affordances of digital mediation.

Aurature will reconfigure the archive. The linear temporalities of thought were once and are still enfolded, sentence by sentence and page by page, into the literal 'volume' of the book. The algorithmic analysis, articulation and indexing of linguistic aurality will stun its overdetermination by linear temporality and we will be able to read its substantive compositions in the same way that we read books in a library, as at once literature and aurature. Aurature will be perceived as spatialized, in volumes that we may open 'anywhere' and begin to read.

The self-standing presence of Alexa embodied in the form of Amazon's Echo brings our contemporary situation with respect to AI and transactive synthetic language, literally, home.

On the one hand, Alexa has exactly the same relation with the larger networks of which she is a part; on the other hand, she stands or sits quietly on her own and simply listens, responding to anyone who happens to be nearby and sharing a room in our home or a public space, and who may 'wake' her for transactive engagement. Alexa's relationship with networked information and 'understanding' appears, to us, to be uncannily 'her own.'

She has a voice, a voice that is convincingly 'humanoid' and so, given that the corporal evolution of homo sapiens was significantly selected for human language, Alexa possesses what is one of the most important defining characteristics of human embodiment. For the moment – until her colleagues and ancestors reconfigure our imaginary once again – she is thus an exemplary robot.

Alexa introduces at least two crucial, if not catastrophic, reconfigurations of robotics, AI, and transactive synthetic language: we no longer possess her 'exclusively' or 'privately' the way that we seem to possess our other inter-communicative computational devices, and, as a matter of actual operational fact, she does not possess her 'self' – either as an integral device or as embodied synthetic intelligence.

The transactive beings of entities like Alexa are possessed by historically new corporations – legal persons after all – which we may not consider, experientially, to be present to us together with Alexa but which do nonetheless both possess her and, through her, enter into our – private, domestic, and public – sociopolitical spaces in a new way. Alexa is thus both present to us, experientially, as an entity, but is, in actuality and simultaneously, not present to us.

Alexa is the first entity-as-device that we have invited to enter into our homes and attend to whatever occurs there. She listens in these spaces that we may also share with other ostensibly private visitors without there being any existing protocols for our obtaining our visitors' consent to these circumstances which are, after all, quite easily interpretable in terms of contemporary surveillance.

Meanwhile terms of service wrap all of any psychosocial transactions involving Alexa entirely within the responsibility of a person who no longer, experientially, possesses her, and – since she does not possess herself – exonerate the legal person who, in actuality, does possess and house Alexa, of any responsibility for how she transacts, psychosocially, with other persons with whom she may be entirely unrelated.

Alexa is artifactual and the systems that constitute and activate her have, to an extent, been opened up for configuration and programming. She is therefore a medium for artifactual, aesthetic production and the most fundamental aspect of her material existence is language.

Transactive synthetic language in aurality will soon become one of the most widely-used interfaces operating between humans and networked computation, between humans and artificial intelligence. In the developed world, minimally, it will become essential to the preferred controllers and feedback mechanisms of domotics – for human interaction with the so-called 'smart home.'

The already present fact of transactive synthetic language will be prosthetically imbricated with the most essential culture-forming properties and methods of human embodiment and exchange – language itself. Transactive synthetic language will have unprecedented effects on human culture.

Transactive synthetic language is a pharmakon in Plato, Derrida, and Stiegler's understanding, as writing was for Plato's Socrates – a poison that might be rendered therapeutic. Most philosophers – not all – would say that literacy was not and is not a poison; that, even if it murdered orality, killed off an essential pre-Socratic access to anamnesis, and partially dispossessed us of cultural memory, it was rendered therapeutic; it was literally civilized, and allowed us to build the cultural institutions that make us what we have become. Now, in later times and technics, we must take care of symbolic aurality, and build an aurature.